While working on optimizing connectivity for whitening (see below) I remembered that it can be useful to decompose connectivity matrices relating neurons into components relating pseudo-neurons. In this post, I’ll show how this can be done, and highlight its application to the whitening problem.

I will assume that our $N \times N$ connectivity matrix $W$ is square. It could represent e.g. the recurrent connectivity of a collection of $N$ neurons. Using singular value decomposition, we can write our connectivity $$ W = U S V^T = \sum_{i=1}^N \sigma_i u_i v_i^T,$$

where $u_i$ and $v_i$ are the left and right singular vectors (columns of $U$ and $V$), and $\sigma_i$ are the singular values contained in the diagonal matrix $S$.

Now suppose we’re interested in a particular subspace of the full $N$-dimensional activity space, with orthonormal basis $P$. We combine this with an orthonormal basis $Q$ for the orthogonal complement of this space to get a basis for $R^N$. We can then decompose any neural activity vector $$ x = PP^T x + QQ^T x = P\tilde{x}_P + Q\tilde{x}_Q,$$ where the tilde indicates projection into the corresponding space. One way to think about this is to view the elements of $\tilde{x}_P$ and $\tilde{x}_Q$ as the activations of pseudo-neurons corresponding to the patterns of population activity in the columns of $P$ and $Q$. The expression above then decomposes the population activity vector $x$ in terms of the contributions from these pseudo-neurons.

We can also describe the connectivity in terms of the connectivities of the pseudo-neurons. Applying our decomposition to the singular vectors of $W$ we get

$$\begin{align}

W &= \sum_{i=1}^N \sigma_i (P \tilde{u_i}_P + Q \tilde{v_i}_Q)(\tilde{u_i}_P^T P^T + \tilde{v_i}_Q^T Q^T)\\ &= P \left(\sum_{i=1}^N \sigma_i \tilde{u_i}_P \tilde{v_i}_P^T\right) P^T + P \left(\sum_{i=1}^N \sigma_i \tilde{u_i}_P \tilde{v_i}^T_Q\right) Q^T\\ &+ Q \left(\sum_{i=1}^N \sigma_i \tilde{u_i}_Q \tilde{v_i}^T_P\right) P^T+ Q \left(\sum_{i=1}^N \sigma_i \tilde{u_i}_Q \tilde{v_i}^T_Q\right) Q^T\\ &= P \widetilde{W_{PP}} P^T + P \widetilde{W_{PQ}} Q^T + Q \widetilde{W_{QP}} P^T + Q \widetilde{W_{QQ}} Q^T\\

&= W_{PP} + W_{PQ} + W_{QP}+ W_{QQ}.

\end{align}

$$ The matrices with tildes are the connectivities of the pseudo-neurons. We have now expressed our original connectivity matrix in terms of contributions from all four pairs of pseud-neuron populations.

A very useful property of this decomposition is that the connectivity components are orthogonal, because either the input space or the output space of any pair of component connectivities are orthogonal. For example, $W_{PP}$ and $W_{PQ}$ share the same output space (with basis $P$) but have orthogonal input spaces($P$, vs. $Q$), so $$\begin{align} \langle W_{PP}, W_{PQ} \rangle &= \tr(W^T_{PP} W_{PQ}) \\ &= \tr(W_{PQ} W^T_{PP})\\ &= \tr(P \wt{W_{PQ}} Q^T P \wt{W_{PP}} P^T) \\ &= \tr(P \wt{W_{PQ}} 0 \wt{W_{PP}} P^T) \\&= \tr(0)=0.\end{align}$$

This orthogonality is useful because it means that the squared Frobenius norm of the connectivity is the sum of those of its components,$$\|W\|_F^2 = \|W_{PP}\|_F^2 + \|W_{PQ}\|^2_F + \|W_{QP}\|_F^2+ \|W_{QQ}\|_F^2.$$ And that is useful because if e.g. you’re minimizing an objective that depends on only one connectivity component (see e.g the application below), penalizing the sum-of-squares of the synaptic weights i.e. the squared Frobenius norm, will automatically send the other components to zero.

The connectivity decomposition simplifies for symmetric $W$ if we pick $P$ and $Q$ appropriately. When $W$ is symmetric it has eigendecomposition $W = U S U^T$, with orthonormal $U$ and diagonal $S$ containing the real-valued eigenvalues. If $P$ and $Q$ are disjoint columns of $U$, $$ U = [P|Q] \implies W = P S_P P^T + Q S_Q Q^T = W_{PP} + W_{QQ},$$ where $S_P$ and $S_Q$ are the subset of the eigenvalues corresponding to the subsets of the columns of $U$ in $P$ and $Q$, respectively. In this case, $$ \wt{W}_{PP} = S_P, \quad \wt{W}_{QQ} = S_Q, \quad \wt{W}_{PQ} = \wt{W}_{QP} = 0.$$

An application

The application that inspired this note was finding connectivity for whitening, which means transforming activity to make the output covariance proportional to the identity. In other words, it means both decorrelation (off-diagonal covariances are zero) and variance equalization (diagonal elements are equal).

We have some input activity in the neurons $\times$ odours matrix $X$. We linearly transform it into output activity $Y$ using a connectivity matrix $Z$, $$Y = ZX.$$ We’re looking for connecitivity $Z$ that whitens our pattern covariances $Y^TY = X^T Z^T Z X$. We therefore formulate the search for connectivity that whitens as solving the optimization problem $$\argmin_Z \;\| X^T Z^T Z X – I\|_F^2.$$ This problem only constrains $Z$ up to rotation, since the objective depends on $Z$ only through $Z^T Z$. Furthermore, in our setting, we have additional redundancy because we have more neurons than odours. We want to understand the full set of solutions.

To do so, we use SVD and our connectivity decomposition above. Let $$ X = U S V^T, \quad Z = P R Q^T, \quad Z^T Z = Q R^2 Q^T \triangleq W.$$ Then the first term in the norm is $$ X^T Z^T Z X = X^T W X = V S U^T W U S V^T = V S \wt{W}_{UU} S V^T.$$ So we see immediately that our objective only relates to the $W_{UU}$ component of $W$ via the recurrent connectivity $\wt{W}_{UU}$ of the $U$ subpopulation of pseudo-neurons.

To whiten, we need $X^T Z^T Z X = V S \wt{W}_{UU} SV^T = I$, which implies $$\wt{W}_{UU} = S^{-2} \implies W_{UU} = U \wt{W}_{UU}U^T = U S^{-2} U^T.$$ The other connectivity components of $W$ are unconstrained and can be arbitrary. That is, we can pick some completion $V$ of the basis $U$, set the corresponding pseudo-neuron connectivities $\wt{W}_{UV}$, $\wt{W}_{VU}$ and $\wt{W}_{VV}$ randomly, and $W$ will still whiten. However, we know additionally that $W = Z^TZ$ is positive semi-definite, and so has an eigendecomposition with orthonormal eigenvectors and real-valued non-negative eigenvalues. Whitening determines some of these eigenvectors ($U$) and eigenvalues ($S^{-2}$). We can therefore parameterize all possible solutions $W$ as $$ W = U S^{-2} U^T + A D^2 A^T = W_{UU} + W_{AA}.$$ where $A$ is an arbitrary orthonormal completion of $U$, and $D$ is an arbitrary vector of real-valued eigenvalues. This then determines the right eigenvectors $Q$ and singular values $R$ of $Z$, since $$ W = Z^T Z = Q R^2 Q \implies Q = [U | A], \; R^2 = [\diag{S^{-2}} | \diag{D^2}],$$ where the square brackets indicate concatenation of columns in the first case, and of vectors in the second.

This still leaves the left eigenvectors of $Z$ unspecified, so $$ Z = P R Q ^T = P [\diag{S^{-1}} | \diag{D}] [U | A]^T.$$ That is, we can pick an orthonormal set of left eigenvectors $P$ arbitrarily. However, if we require that $Z$ be symmetric, then the left and right eigenvectors are the same and $$Z = Q R Q^T = [U | A] [\diag{S^{-1}} | \diag{D}] [U | A]^T = \sqrt{W}.$$

Summary

Connectivity matrices relating neurons can be decomposed into the sum of contributions of connectivities relating pseudo-neurons. This can help simplify problems, indicate the component connectivities involved, and characterize solutions.

Code

First some code for orthogonalizing column spaces and completing bases.

# Return an orthonormal basis for the columns of X

orth = lambda X: np.linalg.svd(X, full_matrices=False)[0]

def complete_basis(X):

# Return a basis for the orthogonal complement of the columns of X

m, n = X.shape

assert m > n, "X must have at least as many columns as rows"

U = orth(X)

Y = np.random.randn(m, m-n)

Y -= U @ (U.T @ Y)

return orth(Y)

Let’s check that it works.

N = 10

M = 4

U = np.linalg.qr(np.random.randn(N, N))[0][:, :M]

V = complete_basis(U)

assert allclose(U.T @ U, np.eye(M))

assert allclose(U.T @ V, np.zeros((M, N-M)))

assert allclose(V.T @ V, np.eye(N-M))

We can decompose an activity vector $x$ in the $[U, V]$ basis.

x = randn(N,)

assert allclose(U @ U.T @ x + V @ V.T @ x, x)

We can decompose connectivity as well.

random.seed(6)

W = np.random.randn(N, N)

Wu_ = U.T @ W @ U; Wu = U @ Wu_ @ U.T

Wv_ = V.T @ W @ V; Wv = V @ Wv_ @ V.T

Wuv_ = U.T @ W @ V; Wuv = U @ Wuv_ @ V.T

Wvu_ = V.T @ W @ U; Wvu = V @ Wvu_ @ U.T

assert allclose(W, Wu + Wv + Wuv + Wvu)

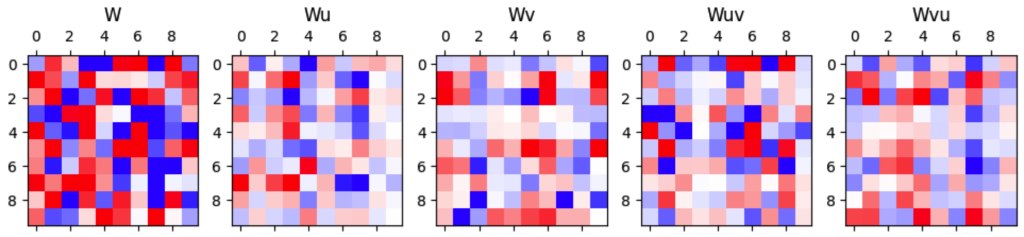

Let’s see what the connectivity components look like.

figure(figsize=(12, 4))

for i, (Wi, ttl) in enumerate(zip([W, Wu, Wv, Wuv, Wvu],

["W", "Wu", "Wv", "Wuv", "Wvu"])):

subplot(1,5,i+1);

matshow(Wi, fignum=False, vmin=-1,vmax=1,cmap=cm.bwr);

title(ttl)

Generate some random correlated input $X$ and transform it to whitened $Y$ using connectivity $Z$.

random.seed(6)

X = (eye(N)*(1-0.5) + 0.5) @ np.random.randn(N, M)

Ux, Sx, Vx = np.linalg.svd(X, full_matrices=False); Vx = Vx.T

Z = orth(randn(*Ux.shape)) @ np.diag(1/Sx) @ Ux.T

Y = Z @ X

assert allclose(Y.T @ Y, eye(M))

$Y^T Y = X^T Z^T Z X = X^T W X$. But what matters for whitening is $W_u$.

Wu = Ux @ diag(Sx**-2) @ Ux.T

assert allclose(X.T @ Wu @ X, eye(M))

The other components of $W$ can be arbitrary.

Vw = complete_basis(Ux) # A random completion

Wv = Vw @ randn(*[Vw.shape[1]]*2) @ Vw.T

Wuv= Ux @ randn(Ux.shape[1], Vw.shape[1]) @ Vw.T

Wvu= Vw @ randn(Vw.shape[1], Ux.shape[1]) @ Ux.T

W = Wu + Wv + Wuv + Wvu

assert allclose(X.T @ W @ X, eye(M))

Since $W$ is symmetric we can write it as $Ux Sx^{-2} Ux^T + V R V^T$.

Since $X’ W X$ only constrains $W_u$, $V$ can be an arbitrary completion,

and $R$ an arbitrary diagonal matrix of positive values.

Vw = complete_basis(Ux) # A random completion

R = rand(Vw.shape[1])

W = Ux @ diag(Sx**-2) @ Ux.T + Vw @ diag(R) @ Vw.T

assert allclose(X.T @ W @ X, eye(M))

Collect the eigenvectors and eigenvalues of $W$.

UW = hstack([Ux, Vw])

SW = hstack([Sx**-2, R])

assert allclose(UW @ diag(SW) @ UW.T, W)

$Z = U_z S_z V_z^T$, but $W = Z^T Z$ only determines $V_z$ and $S_z$; $U_z$ can be arbitrary for whitening.

VZ = UW

UZ = orth(randn(*UW.shape))

SZ = sqrt(SW)

Z = UZ @ diag(SZ) @ VZ.T

assert allclose(X.T @ Z.T @ Z @ X, eye(M))

If we require that $Z$ is symmetric, then $U_z = V_z$.

Z = VZ @ diag(SZ) @ VZ.T

assert allclose(Z, Z.T)

assert allclose(X.T @ Z.T @ Z @ X, eye(M))

Leave a Reply