I need to invert a matrix of the form $$ M = I + S^2 H S^2,$$ where $H$ is a symmetric matrix, and $S^2$ is diagonal. The elements of $S^2$ drop off very quickly, so what remains of $H$ are its first column and first row, scaled by $S_{1}^2 S^2$. The result is that $M$ looks, appropriately enough on the day after the Chiefs won the SuperBowl, like an arrowhead, consisting of a diagonal, the leftmost column, and the topmost row. We’re going to invert such matrices.

Computing the inverse elementwise.

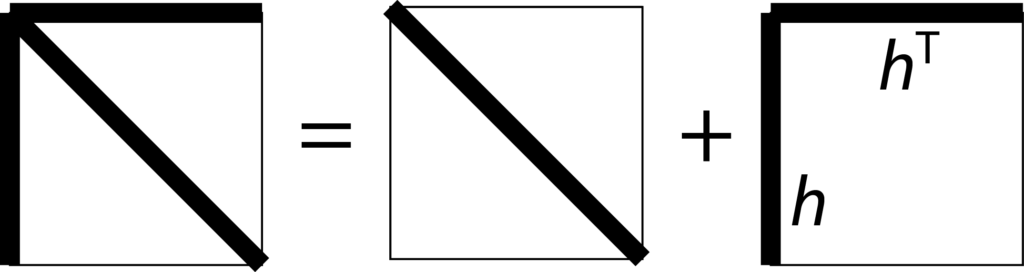

To invert the matrix elementwise, we represent it as in the figure above, as consisting of the sum of a diagonal matrix and a ‘corner’ matrix made of the same vector $h$ as the leftmost column and the topmost row. That is,

$$ M_{ij} = \delta_{ij} + \delta_{i1}h_j + h_i\delta_{1j} – h_{1} \delta_{i1}\delta_{1j},$$ where the last term avoids double counting the term at the top-left corner.

The inverse $G$ satisfies $\sum_{k} M_{ik} G_{kj} = \delta_{ij}.$ Then \begin{align}\delta_{ij} &= \sum_k M_{ik} G_{kj}\\

&= \sum_k (\delta_{ik} + h_i \delta_{1k} + \delta_{i1}h_k – \delta_{i1}\delta_{1k}h_{1})G_{kj}\\

&= G_{ij} + h_i G_{1j} + \delta_{i1}\sum_k (h_k – \delta_{1k} h_{1})G_{kj} \\

&= G_{ij} + h_i G_{1j} + \delta_{i1}\left(-h_{1} G_{1j} + \sum_k h_k G_{kj}\right)\\&= G_{ij} + h_i G_{1j} + \delta_{i1} \sum_{k>1} h_k G_{kj}.

\end{align}

Let’s first consider the case where $i>1$. Then

\begin{align} G_{ij} &= \delta_{ij} – h_i G_{1j}.

\end{align} Let’s define $g_j = G_{1j}$. Then rows below the top look like $I – h g^T$.

Now let’s consider the case where $i=1$. Then

\begin{align}

\delta_{1j} &= g_j + h_1 g_j + \sum_{k>1} h_k G_{kj}\\

&= (1 + h_1)g_j + \sum_{k>1}h_k (\delta_{kj} – h_k g_j)\\&= (1 + h_1 + h_1^2 – \|h\|_2^2)g_j + (j>1) h_j.\end{align} So, defining $$h_0 \triangleq 1 + h_1 + h_1^2 – \|h\|_2^2$$ we get \begin{align} g_1 &= {1 \over h_0}, \quad g_{k>1} = -{h_k \over h_0}.\end{align} Putting it altogether we arrive at

$$G = I – e_1 e_1^T + ((1+h_1)e_1 – h)g^T.$$ The $I – e_1 e_1^T$ term covers the required identity term when $i>1$, Here the $e_1 e_1^T$ terms subtracts the top-left 1 from the identity matrix, and the coefficients of $g^T$ are such that the first is $1$, and the remainder are $-h_2, -h_3, \dots$

In fact, we notice that the latter vector forms the numerator of $g$. Therefore, we assign it its own symbol

$$\tilde h \triangleq [1, -h_2, -h_3, ..],$$ in terms of which $g = \tilde h/h_0,$ and

$$ \boxed{G = I – e_1 e_1^T + {\tilde h \tilde h^T \over h_0}.}$$

A Colab notebook that verifies this expression is here.

█

Leave a Reply